Abstract:

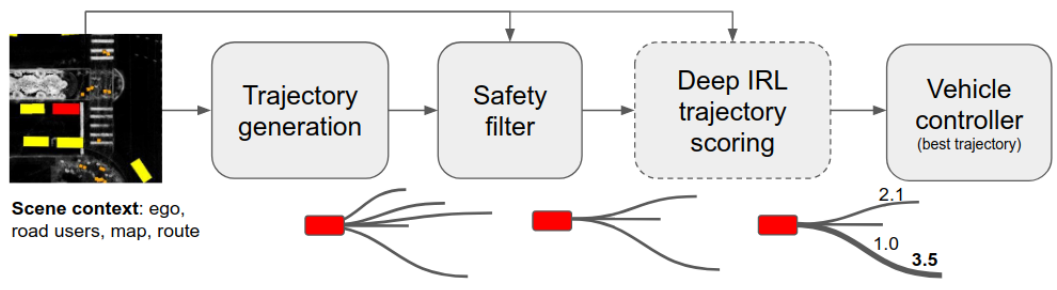

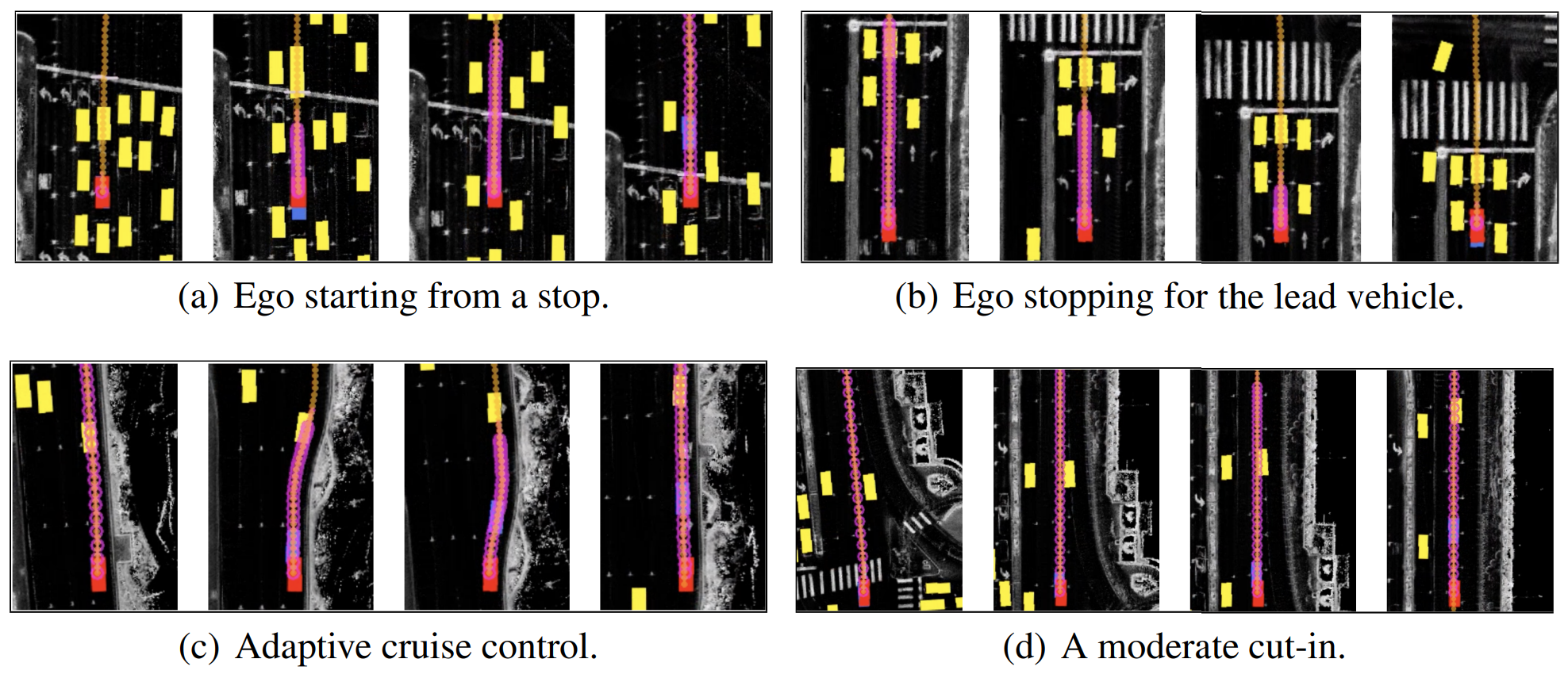

In this paper, we introduce the first published planner to drive a car in dense, urban traffic using Inverse Reinforcement Learning (IRL). Our planner, DriveIRL, generates a diverse set of trajectory proposals and scores them with a learned model. The best trajectory is tracked by our self-driving vehicle’s low-level controller. We train our trajectory scoring model on a 500+ hour real-world dataset of expert driving demonstrations in Las Vegas within the maximum entropy IRL framework. DriveIRL’s benefits include: a simple design due to only learning the trajectory scoring function, a flexible and relatively interpretable feature engineering approach, and strong real-world performance. We validated DriveIRL on the Las Vegas Strip and demonstrated fully autonomous driving in heavy traffic, including scenarios involving cut-ins, abrupt braking by the lead vehicle, and hotel pickup/dropoff zones. Our dataset, a part of nuPlan, has been released to the public to help further research in this area.
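The propose-then-score design described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation. It assumes a linear scoring function over hand-engineered features, a finite proposal set per scene, and that the proposal closest to the expert demonstration is already labeled. Under those assumptions, maximum entropy IRL reduces to a softmax over proposal scores, and the log-likelihood gradient is the expert's features minus the expected features. All function names, the three feature names, and the toy data are hypothetical.

```python
import math

def score(weights, features):
    """Linear score (assumed form): dot product of weights and trajectory features."""
    return sum(w * f for w, f in zip(weights, features))

def proposal_probabilities(weights, proposals):
    """Max-entropy distribution over a finite proposal set: softmax of the scores."""
    scores = [score(weights, f) for f in proposals]
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def maxent_gradient(weights, proposals, expert_index):
    """Log-likelihood gradient: expert features minus expected features."""
    probs = proposal_probabilities(weights, proposals)
    dim = len(weights)
    expected = [sum(p * f[k] for p, f in zip(probs, proposals)) for k in range(dim)]
    return [proposals[expert_index][k] - expected[k] for k in range(dim)]

def train(scenes, dim, learning_rate=0.1, epochs=200):
    """Plain gradient ascent on the expert log-likelihood, one scene at a time."""
    weights = [0.0] * dim
    for _ in range(epochs):
        for proposals, expert_index in scenes:
            grad = maxent_gradient(weights, proposals, expert_index)
            weights = [w + learning_rate * g for w, g in zip(weights, grad)]
    return weights

def select_best(weights, proposals):
    """Index of the highest-scoring proposal, which would be handed to the controller."""
    scores = [score(weights, f) for f in proposals]
    return max(range(len(proposals)), key=scores.__getitem__)

# Toy scenes with hypothetical features: [progress, closeness to lead vehicle, jerk].
# Each scene is (proposal feature vectors, index of the expert-like proposal).
scenes = [
    ([[1.0, 0.9, 0.2], [0.7, 0.1, 0.1], [0.2, 0.0, 0.8]], 1),
    ([[0.9, 0.8, 0.1], [0.6, 0.2, 0.2], [0.1, 0.1, 0.9]], 1),
]
weights = train(scenes, dim=3)

# Score a new set of proposals and pick the best one.
new_proposals = [[0.95, 0.85, 0.15], [0.65, 0.15, 0.15], [0.15, 0.05, 0.85]]
best = select_best(weights, new_proposals)
print("selected proposal:", best)
```

Because only the scoring weights are learned, the proposal generator and the low-level controller can stay hand-designed, and each learned weight can be read directly as the importance of one feature.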

Figure 1: DriveIRL system architecture

Figure 5: Examples from simulation

Figure 6: Example from real-world scenario

Citation

Phan-Minh, T., Howington, F., Chu, T.-S., Tomov, M. S., Beaudoin, R. E., Lee, S. U., Li, N., Dicle, C., Findler, S., Suarez-Ruiz, F., Yang, B., Omari, S., Wolff, E. M. (2023). “DriveIRL: Drive in Real Life with Inverse Reinforcement Learning.” 2023 IEEE International Conference on Robotics and Automation (ICRA), pp. 1544-1550. https://doi.org/10.1109/ICRA48891.2023.10160449.

@INPROCEEDINGS{phan2023driveirl,
  author={Phan-Minh, Tung and Howington, Forbes and Chu, Ting-Sheng and Tomov, Momchil S. and Beaudoin, Robert E. and Lee, Sang Uk and Li, Nanxiang and Dicle, Caglayan and Findler, Samuel and Suarez-Ruiz, Francisco and Yang, Bo and Omari, Sammy and Wolff, Eric M.},
  booktitle={2023 IEEE International Conference on Robotics and Automation (ICRA)},
  title={DriveIRL: Drive in Real Life with Inverse Reinforcement Learning},
  year={2023},
  volume={},
  number={},
  pages={1544-1550},
  keywords={Strips;Reinforcement learning;Lead;Reliability engineering;Entropy;Trajectory;Safety;ML-based Planning;Inverse Reinforcement Learning;Real-World Deployment;Self-Driving;Autonomous Vehicles;Urban Driving;Learning from Human Driving},
  doi={10.1109/ICRA48891.2023.10160449},
  url={https://doi.org/10.1109/ICRA48891.2023.10160449}
}