Abstract:

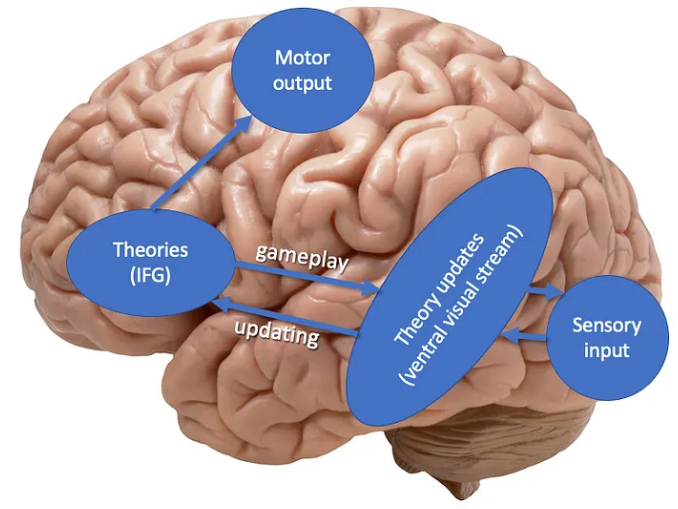

Humans learn internal models of the world that support planning and generalization in complex environments. Yet it remains unclear how such internal models are represented and learned in the brain. We approach this question using theory-based reinforcement learning, a strong form of model-based reinforcement learning in which the model is a kind of intuitive theory. We analyzed fMRI data from human participants learning to play Atari-style games. We found evidence of theory representations in prefrontal cortex and of theory updating in prefrontal cortex, occipital cortex, and fusiform gyrus. Theory updates coincided with transient strengthening of theory representations. Effective connectivity during theory updating suggests that information flows from prefrontal theory-coding regions to posterior theory-updating regions. Together, our results are consistent with a neural architecture in which top-down theory representations originating in prefrontal regions shape sensory predictions in visual areas, where factored theory prediction errors are computed and trigger bottom-up updates of the theory.

Behavioral paradigm: Atari-style video games

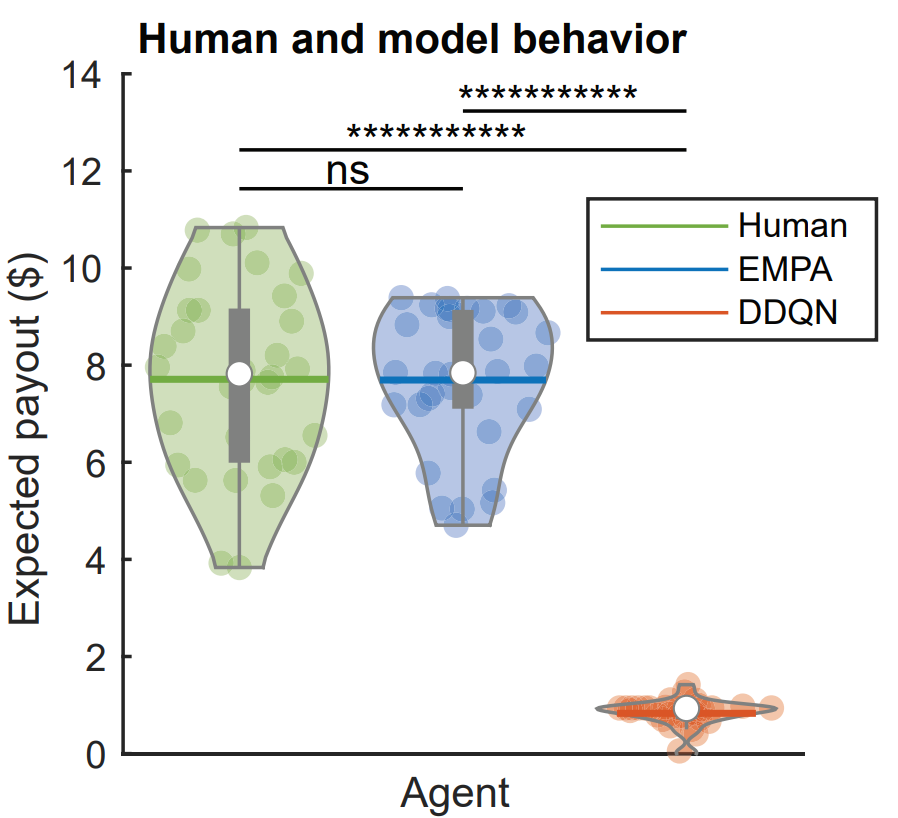

Figure 2C: Behavioral and modeling results

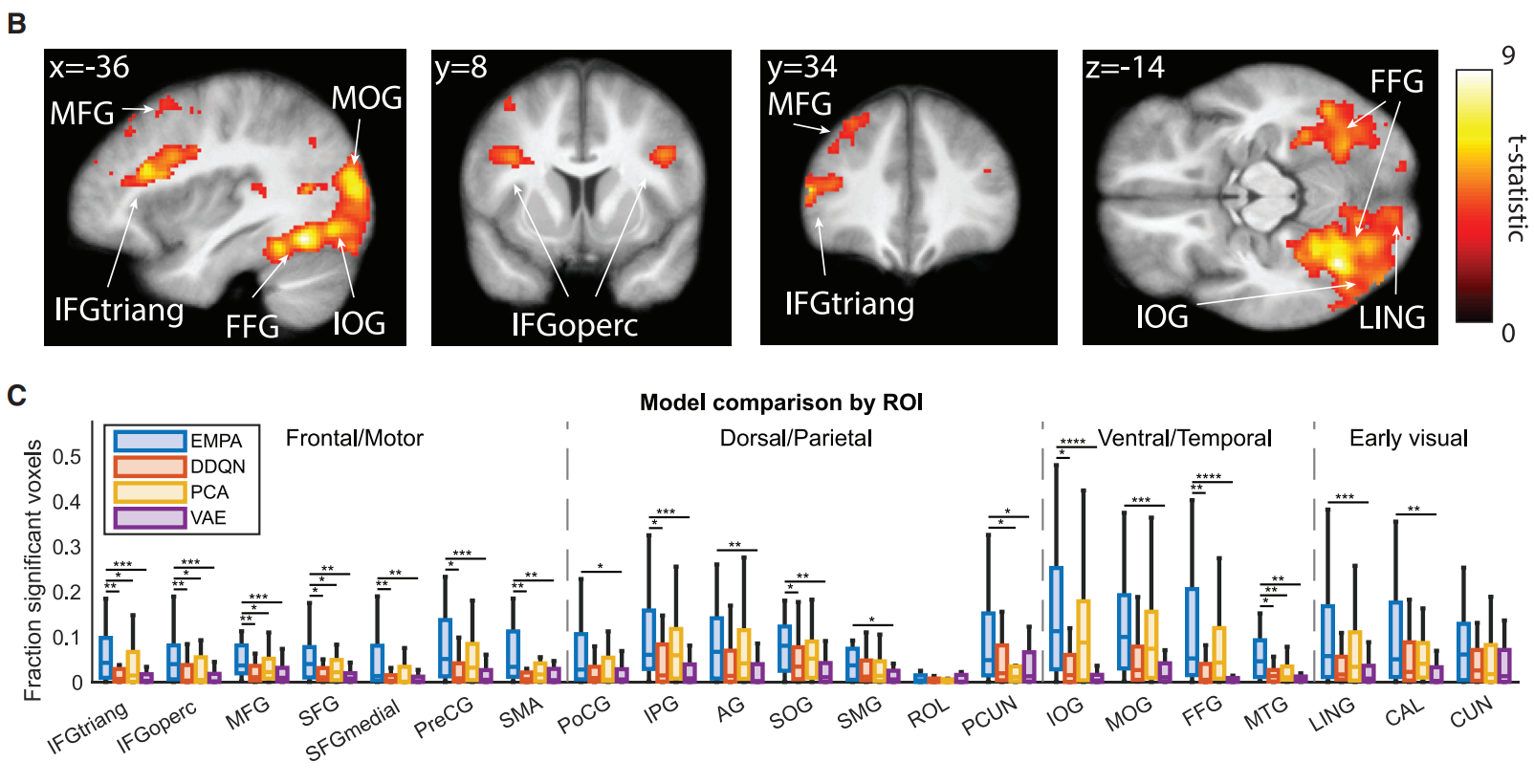

Figure 3: Theory representations in prefrontal cortex and ventral/dorsal streams

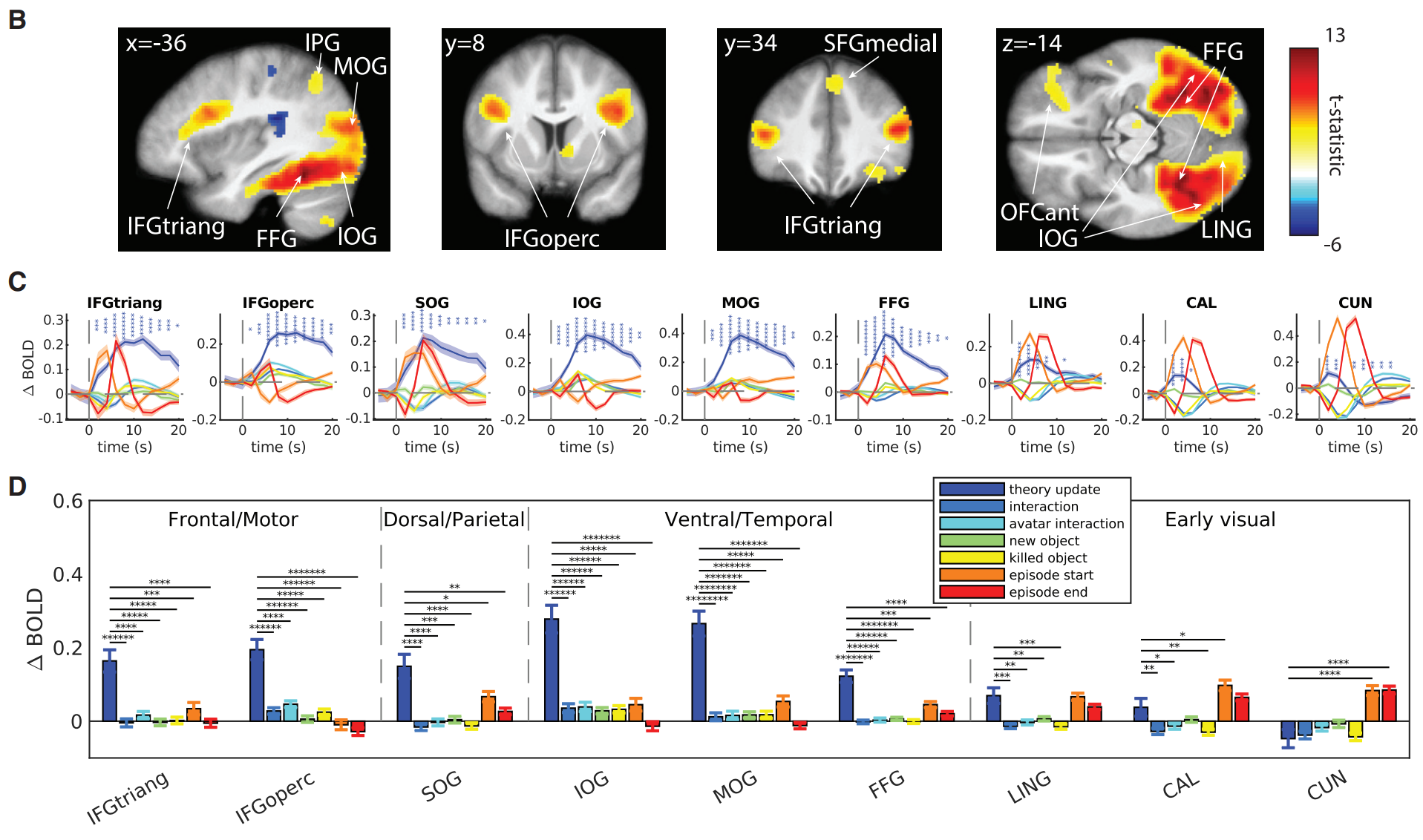

Figure 4: Theory learning signals in prefrontal cortex and ventral/dorsal streams

Neural architecture of theory-based reinforcement learning consistent with fMRI results

Citation

Tomov, M. S., Tsividis, P., Pouncy, T., Tenenbaum, J. B., Gershman, S. J. (2023). “The neural architecture of theory-based reinforcement learning.” Neuron 111 (8): 1331-1344. https://doi.org/10.1016/j.neuron.2023.01.023.

@article{tomov2023neural,
  title={The neural architecture of theory-based reinforcement learning},
  author={Tomov, Momchil S and Tsividis, Pedro A and Pouncy, Thomas and Tenenbaum, Joshua B and Gershman, Samuel J},
  journal={Neuron},
  volume={111},
  number={8},
  pages={1331--1344},
  year={2023},
  publisher={Elsevier},
  doi={10.1016/j.neuron.2023.01.023},
  url={https://doi.org/10.1016/j.neuron.2023.01.023}
}