Abstract:

We present TreeIRL, a novel planner for autonomous driving that combines Monte Carlo tree search (MCTS) and inverse reinforcement learning (IRL) to achieve state-of-the-art performance in simulation and in real-world driving. The core idea is to use MCTS to find a promising set of safe candidate trajectories and a deep IRL scoring function to select the most human-like among them. We evaluate TreeIRL against both classical and state-of-the-art planners in large-scale simulations and on 500+ miles of real-world autonomous driving in the Las Vegas metropolitan area. Test scenarios include dense urban traffic, adaptive cruise control, cut-ins, and traffic lights. TreeIRL achieves the best overall performance, striking a balance between safety, progress, comfort, and human-likeness. To our knowledge, our work is the first demonstration of MCTS-based planning on public roads and underscores the importance of evaluating planners across a diverse set of metrics and in real-world environments. TreeIRL is highly extensible and could be further improved with reinforcement learning and imitation learning, providing a framework for exploring different combinations of classical and learning-based approaches to solve the planning bottleneck in autonomous driving.
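The generate-then-select idea described above can be illustrated with a toy sketch. This is not the authors' implementation: the exhaustive enumeration in `generate_candidates` is only a stand-in for MCTS, the hand-written linear `score` is only a stand-in for the deep IRL scoring function, and every name, constant, and weight below is a hypothetical choice made for illustration.

```python
from itertools import product

DT = 1.0                   # assumed time step in seconds
ACCELS = (-2.0, 0.0, 1.0)  # assumed discrete accelerations in m/s^2


def rollout(v0, accels):
    """Integrate position and speed for one acceleration sequence."""
    s, v, traj = 0.0, v0, []
    for a in accels:
        v = max(0.0, v + a * DT)  # the vehicle does not reverse
        s += v * DT
        traj.append((s, v, a))
    return traj


def is_safe(traj, obstacle_s):
    """Hypothetical safety check: never reach a static obstacle position."""
    return all(s < obstacle_s for s, _, _ in traj)


def generate_candidates(v0, obstacle_s, horizon=3):
    """Stand-in for the tree search: enumerate sequences, keep the safe ones."""
    all_trajs = (rollout(v0, seq) for seq in product(ACCELS, repeat=horizon))
    return [t for t in all_trajs if is_safe(t, obstacle_s)]


def score(traj, weights=(1.0, -0.5)):
    """Stand-in for the learned scorer: progress minus a discomfort penalty."""
    progress = traj[-1][0]
    discomfort = sum(abs(a) for _, _, a in traj)
    return weights[0] * progress + weights[1] * discomfort


def plan(v0, obstacle_s):
    """Select the highest-scoring safe candidate, or None if none is safe."""
    candidates = generate_candidates(v0, obstacle_s)
    if not candidates:
        return None
    return max(candidates, key=score)


if __name__ == "__main__":
    best = plan(v0=5.0, obstacle_s=10.0)
    print(best)
```

The point of the split is that safety is enforced when candidates are generated, so the scorer only ranks trajectories that have already passed the safety check.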

Real-world driving of TreeIRL and other planners

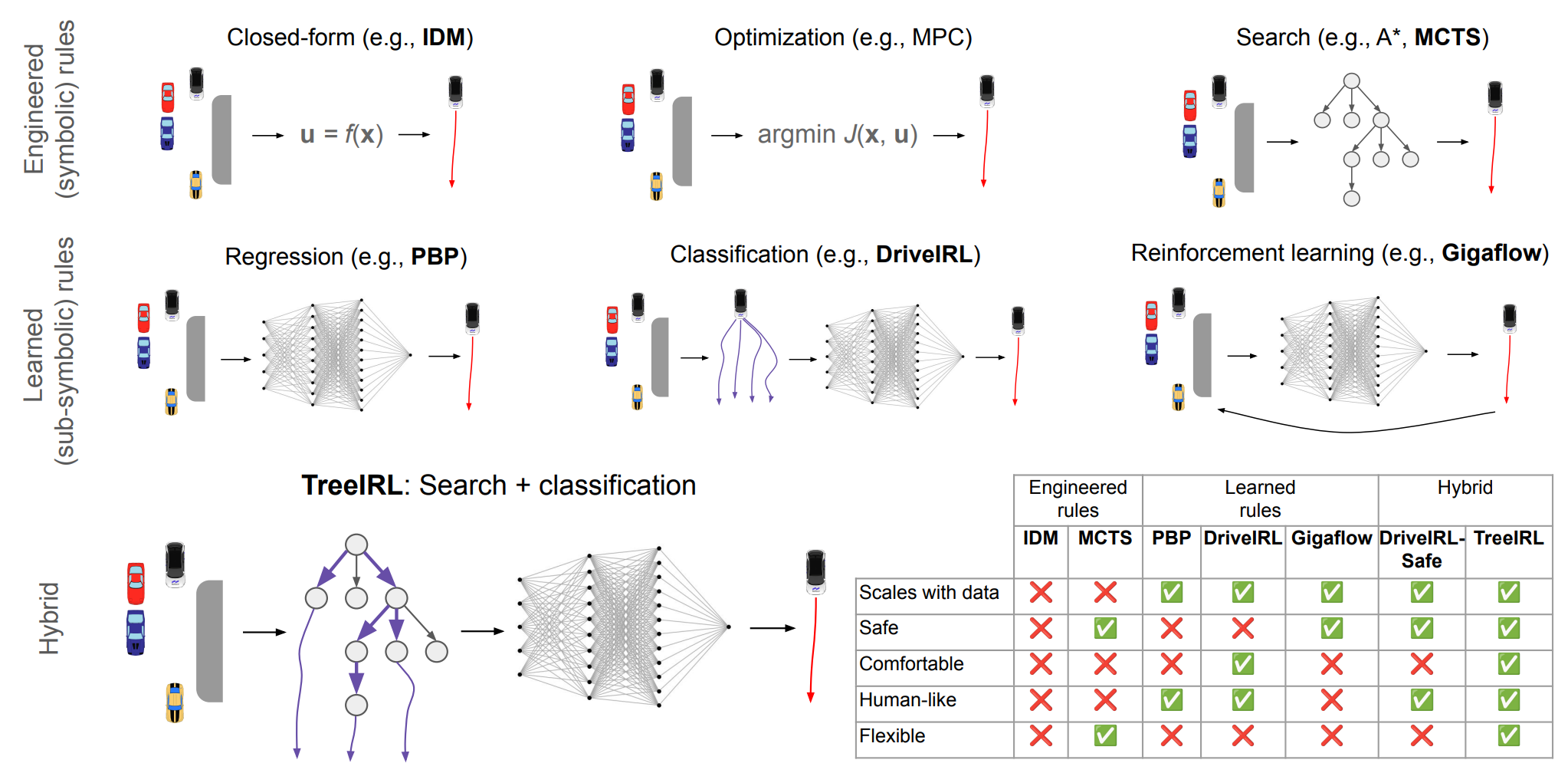

Figure 1: Landscape of motion planning approaches and summary of our results

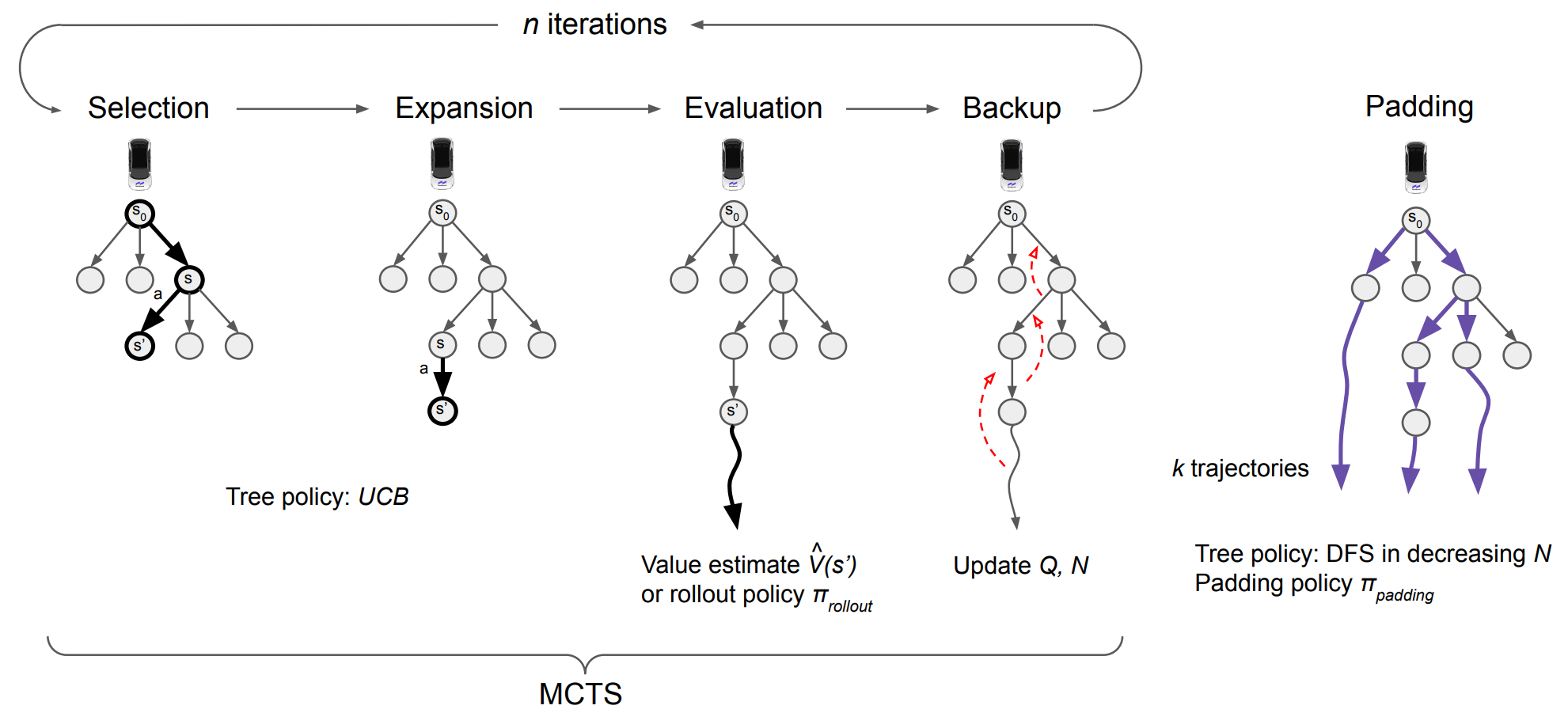

Figure 2: MCTS trajectory generator

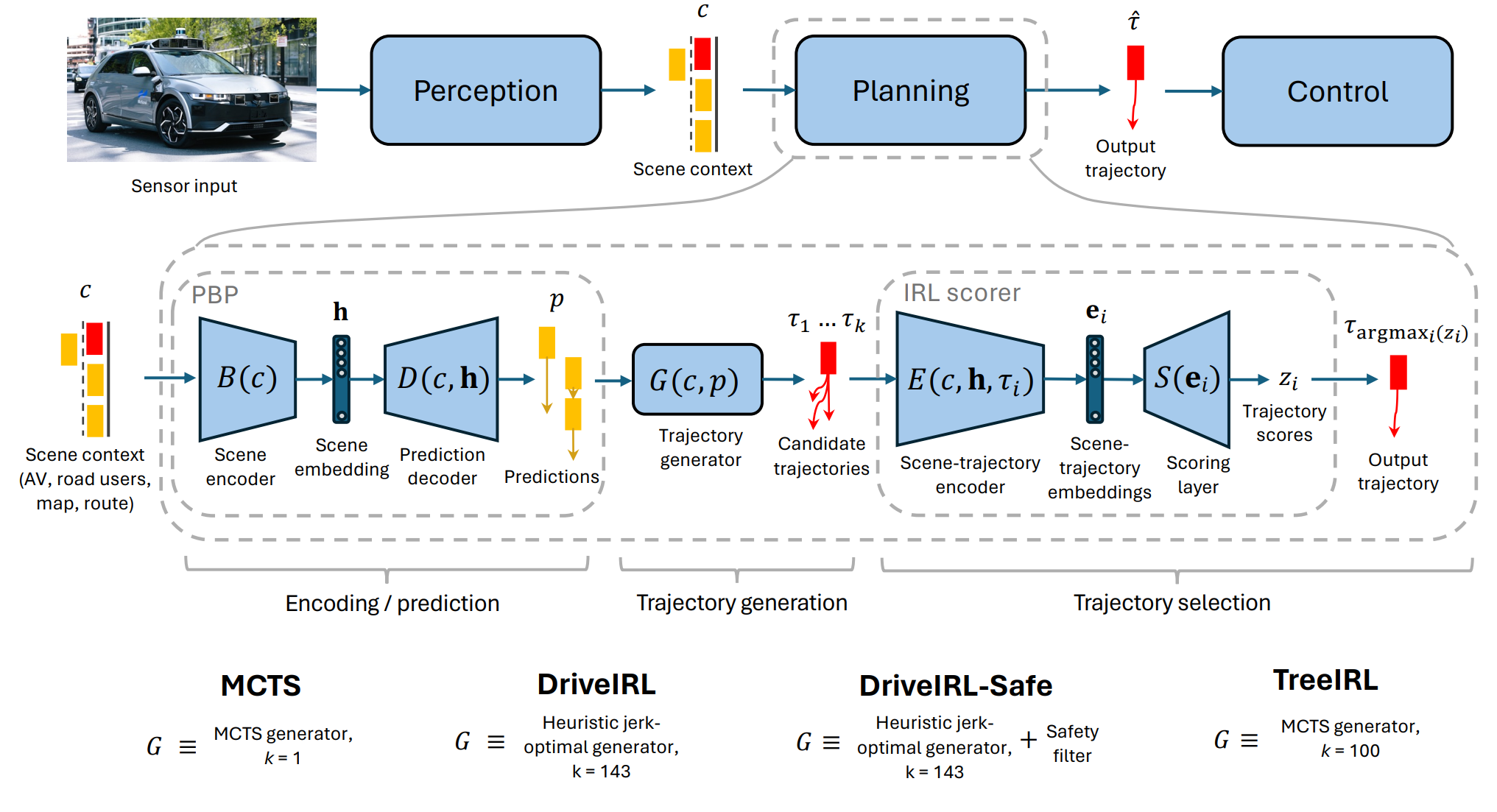

Figure 3: Planner architecture

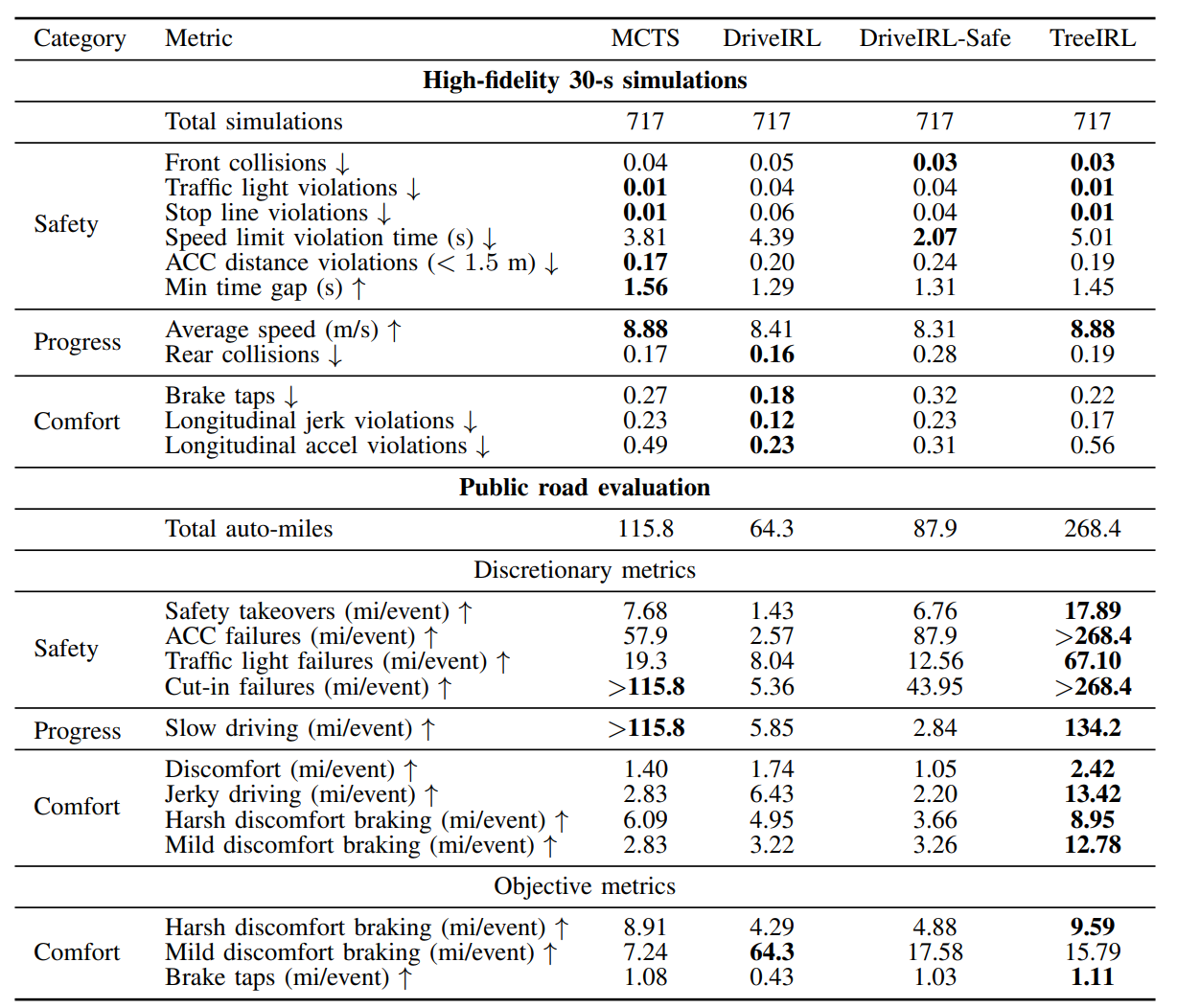

Table 6: Key results from high-fidelity simulation and on-road evaluation

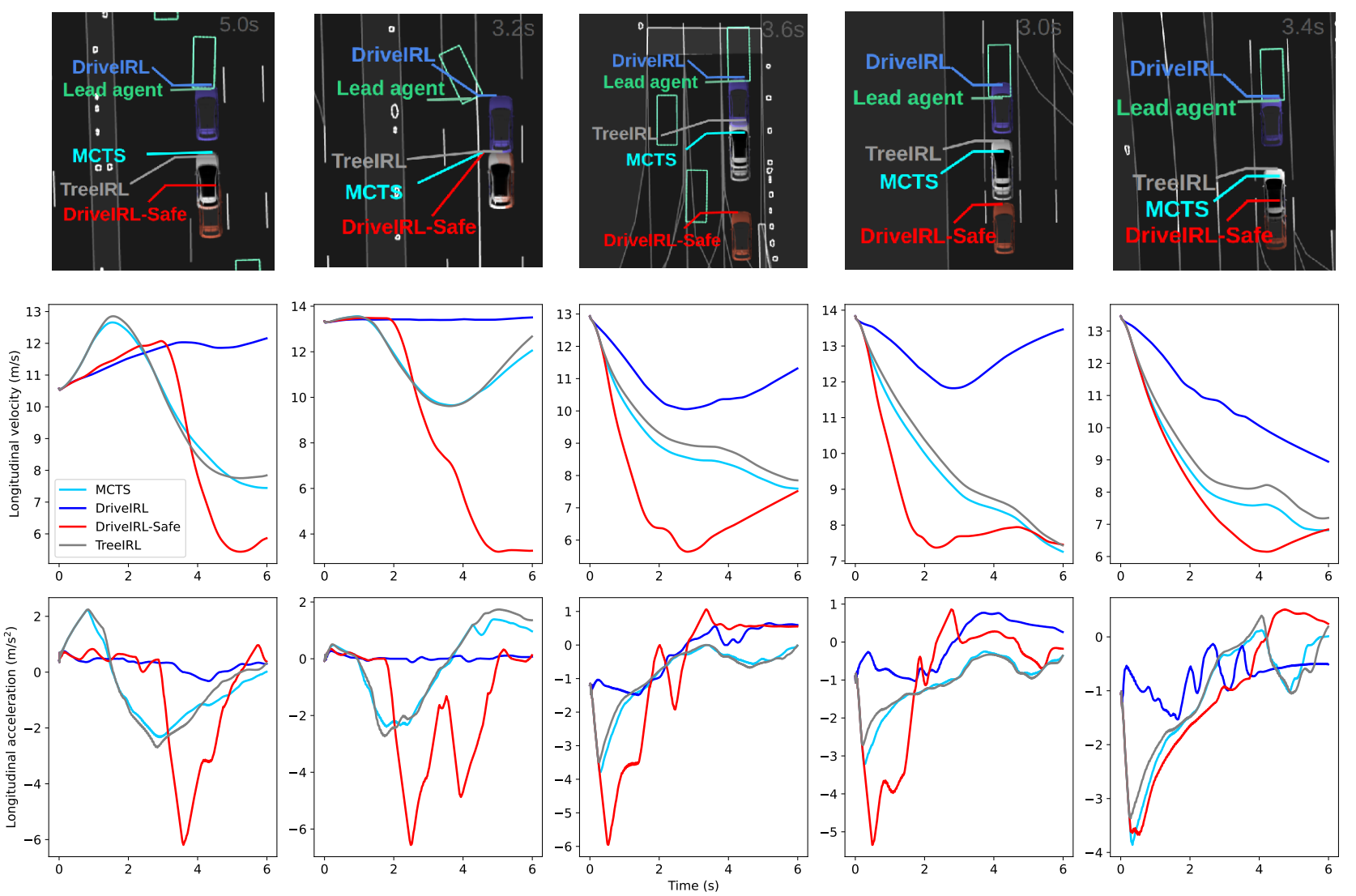

Figure 8: High-fidelity simulation examples

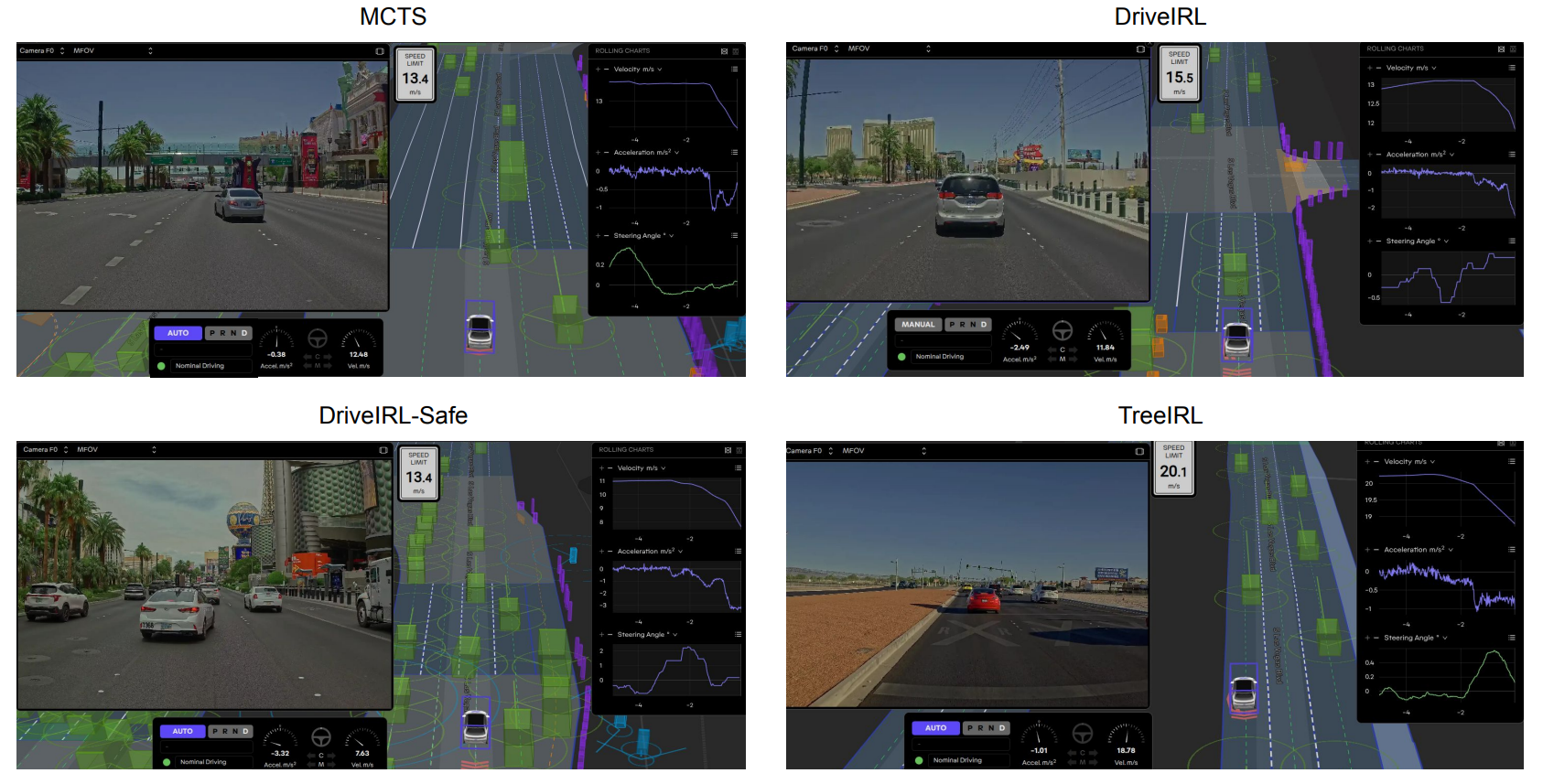

Figure 9: On-road examples

Citation

Tomov, M. S., Lee, S. U., Hendrago, H., Huh, J., Han, T., Howington, F., da Silva, R., Bernasconi, G., Heim, M., Findler, S., Ji, X., Boule, A., Napoli, M., Chen, K., Miller, J., Floor, B., & Hu, Y. (2025). “TreeIRL: Safe Urban Driving with Tree Search and Inverse Reinforcement Learning.” arXiv preprint arXiv:2509.13579.

@article{tomov2025treeirl,
  title={TreeIRL: Safe Urban Driving with Tree Search and Inverse Reinforcement Learning},
  author={Momchil S. Tomov and Sang Uk Lee and Hansford Hendrago and Jinwook Huh and Teawon Han and Forbes Howington and Rafael da Silva and Gianmarco Bernasconi and Marc Heim and Samuel Findler and Xiaonan Ji and Alexander Boule and Michael Napoli and Kuo Chen and Jesse Miller and Boaz Floor and Yunqing Hu},
  year={2025},
  eprint={2509.13579},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  doi={10.48550/arXiv.2509.13579},
  url={https://doi.org/10.48550/arXiv.2509.13579}
}