Description

The ultimate test of a computational account of naturalistic decision making is whether it can perform like humans in the real world. Self-driving cars are an excellent, and still unsolved, testbed for this challenge. Unlike other AI applications (e.g., chatbots), they face many of the same constraints as humans, such as limited time, compute, and memory budgets. They also operate in dynamic, high-dimensional, safety-critical environments that require continuous interaction with other humans, so they must plan accurately and efficiently, be interpretable, and behave in human-like ways. Despite significant advances over the last decade, current autonomous driving technology still lacks human-level abilities, with planning and decision making – the core cognitive functions that determine driving behavior – posing the greatest challenge.

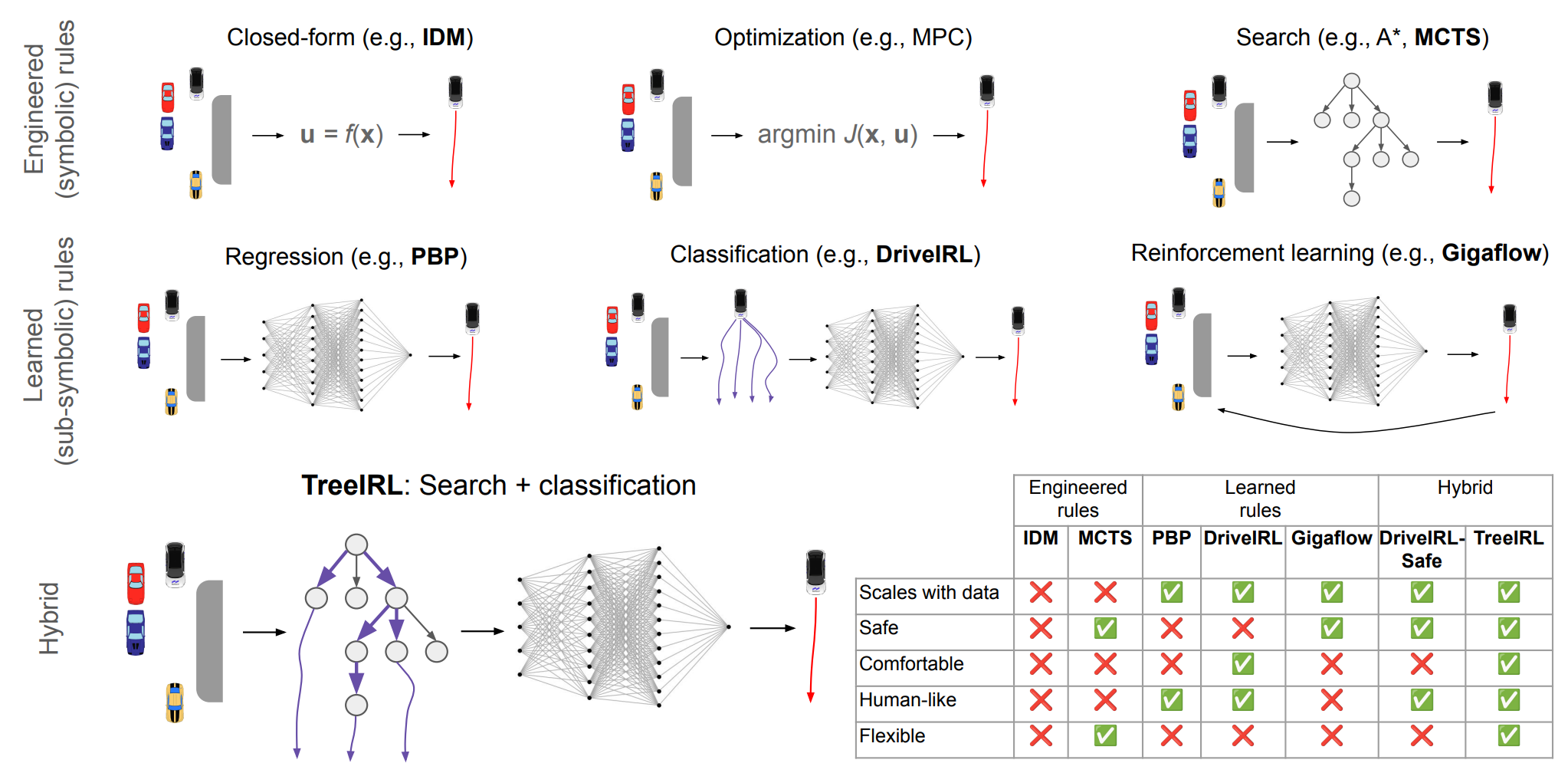

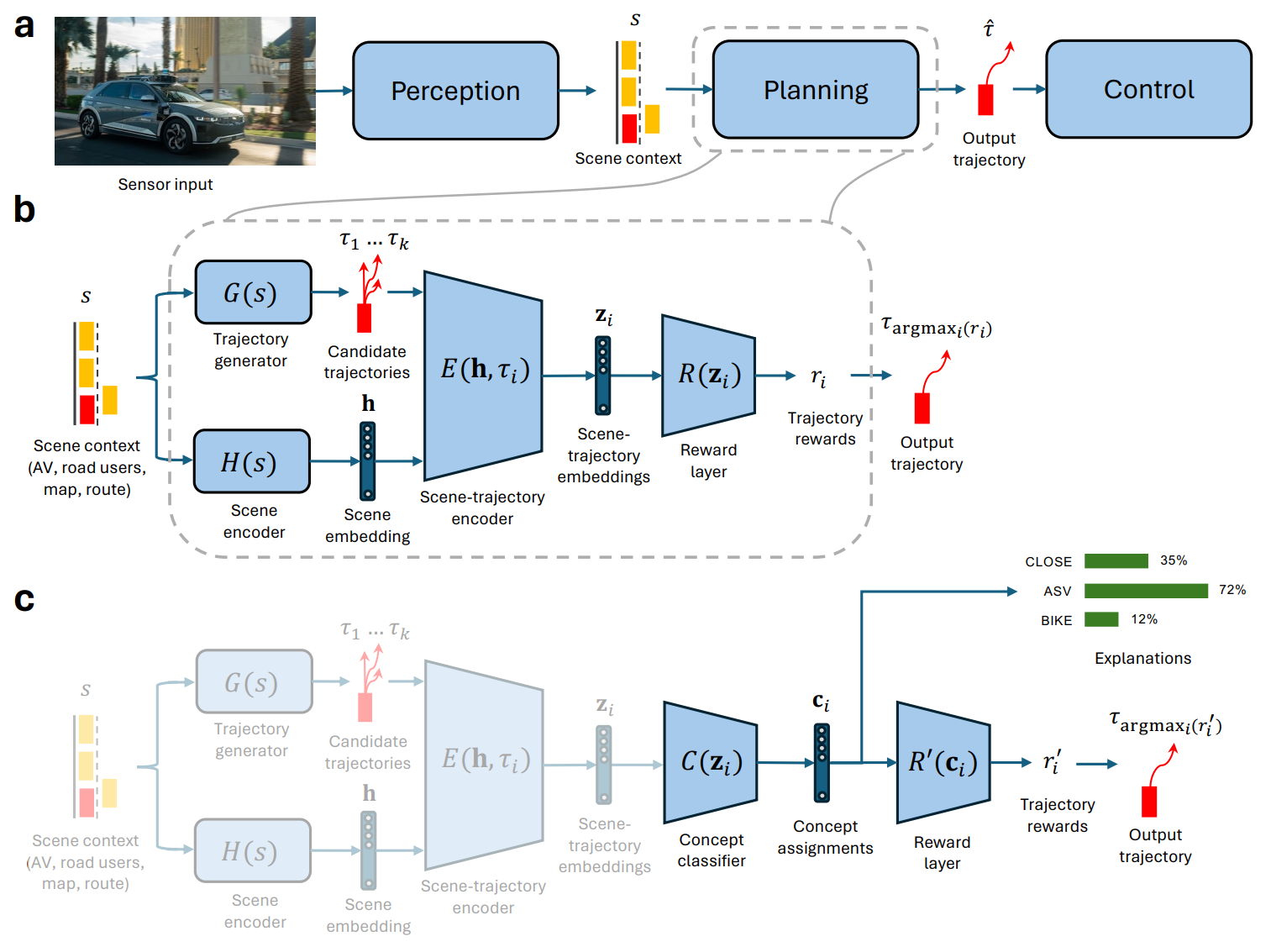

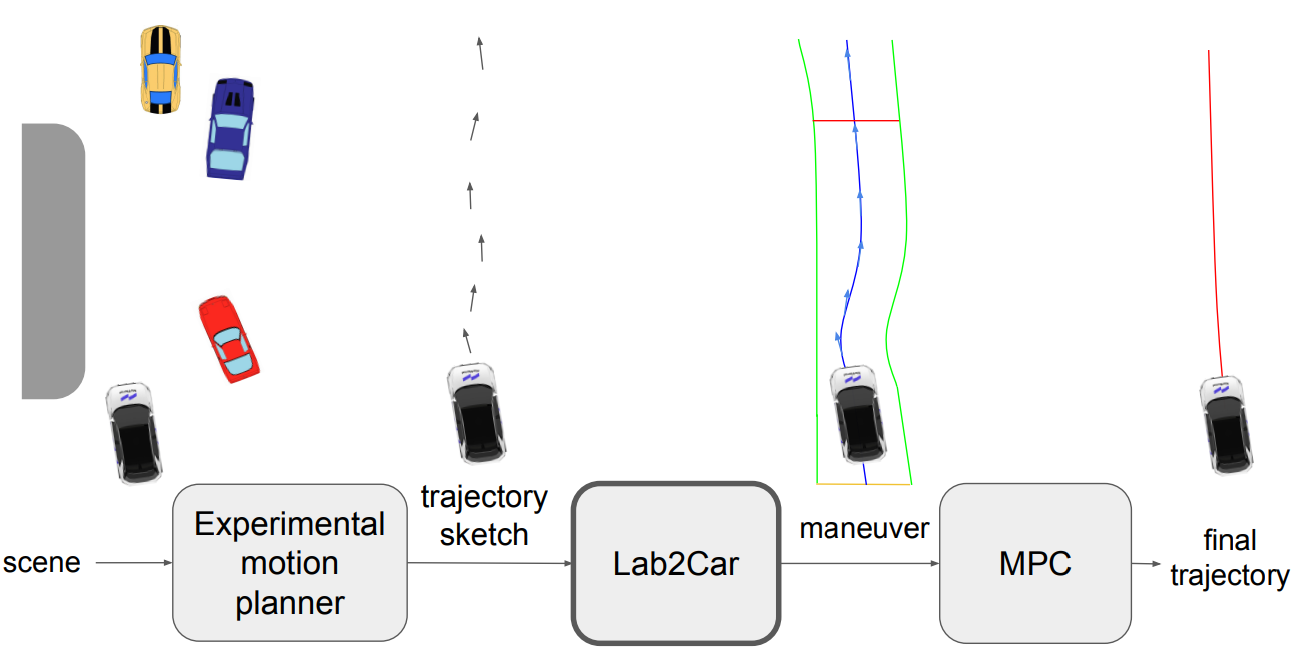

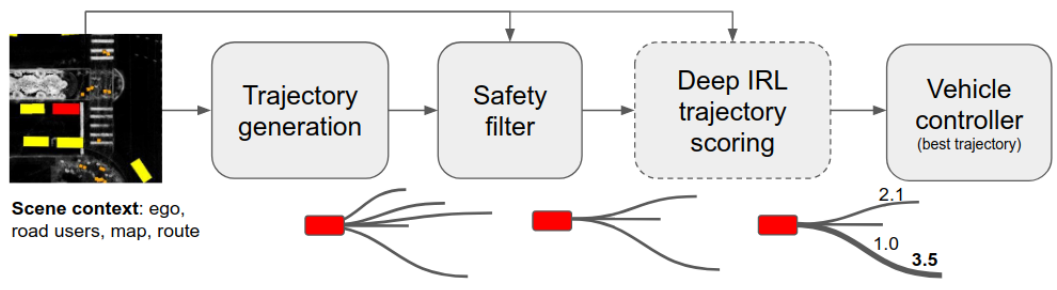

My secondary research focus is to address this gap by imbuing autonomous vehicles with inductive biases derived from our understanding of naturalistic human decision making, and by evaluating the resulting systems against human driving in simulation and in the real world.