The neural architecture of theory-based reinforcement learning

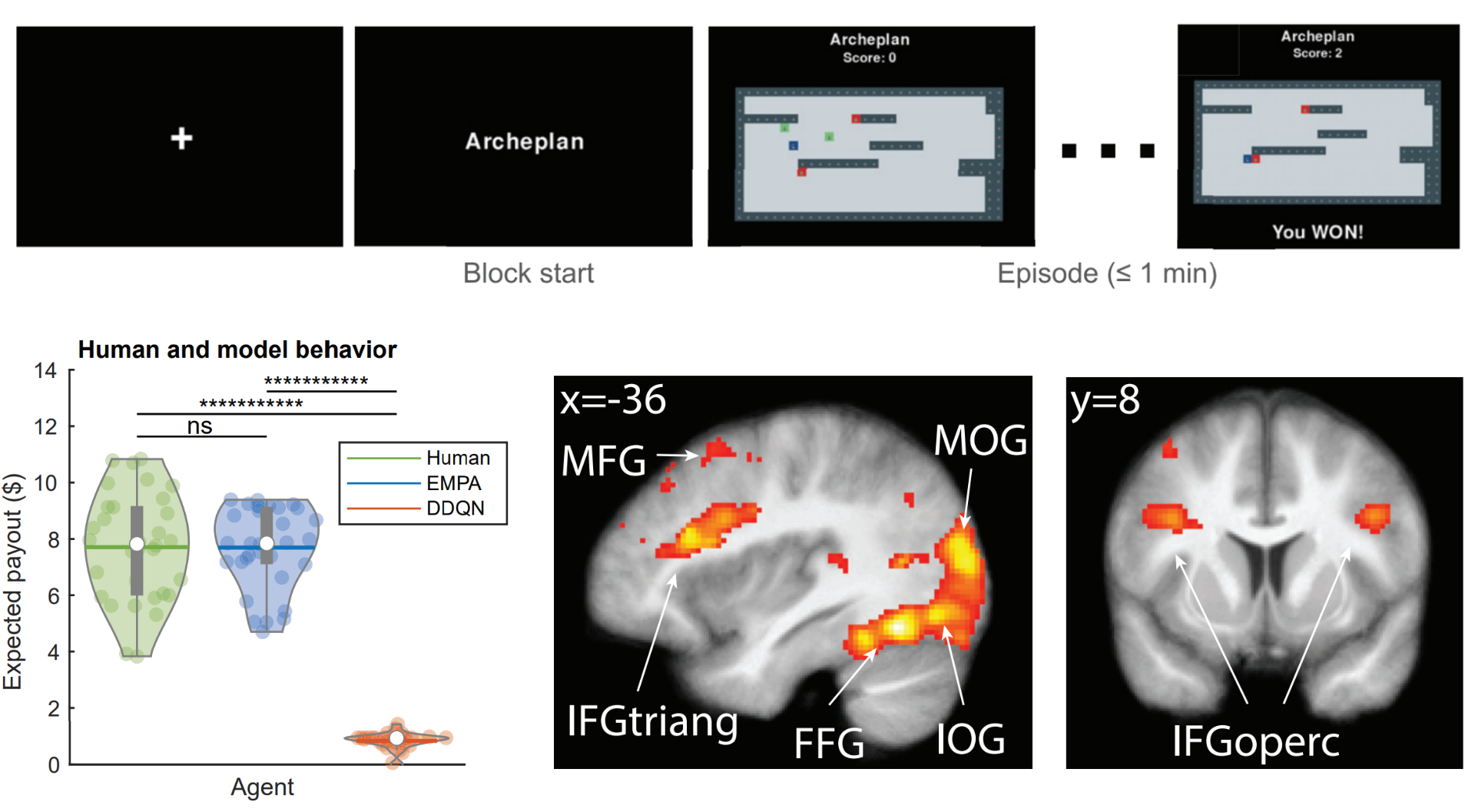

How does the brain build mental models of rich, dynamic domains, such as video games?

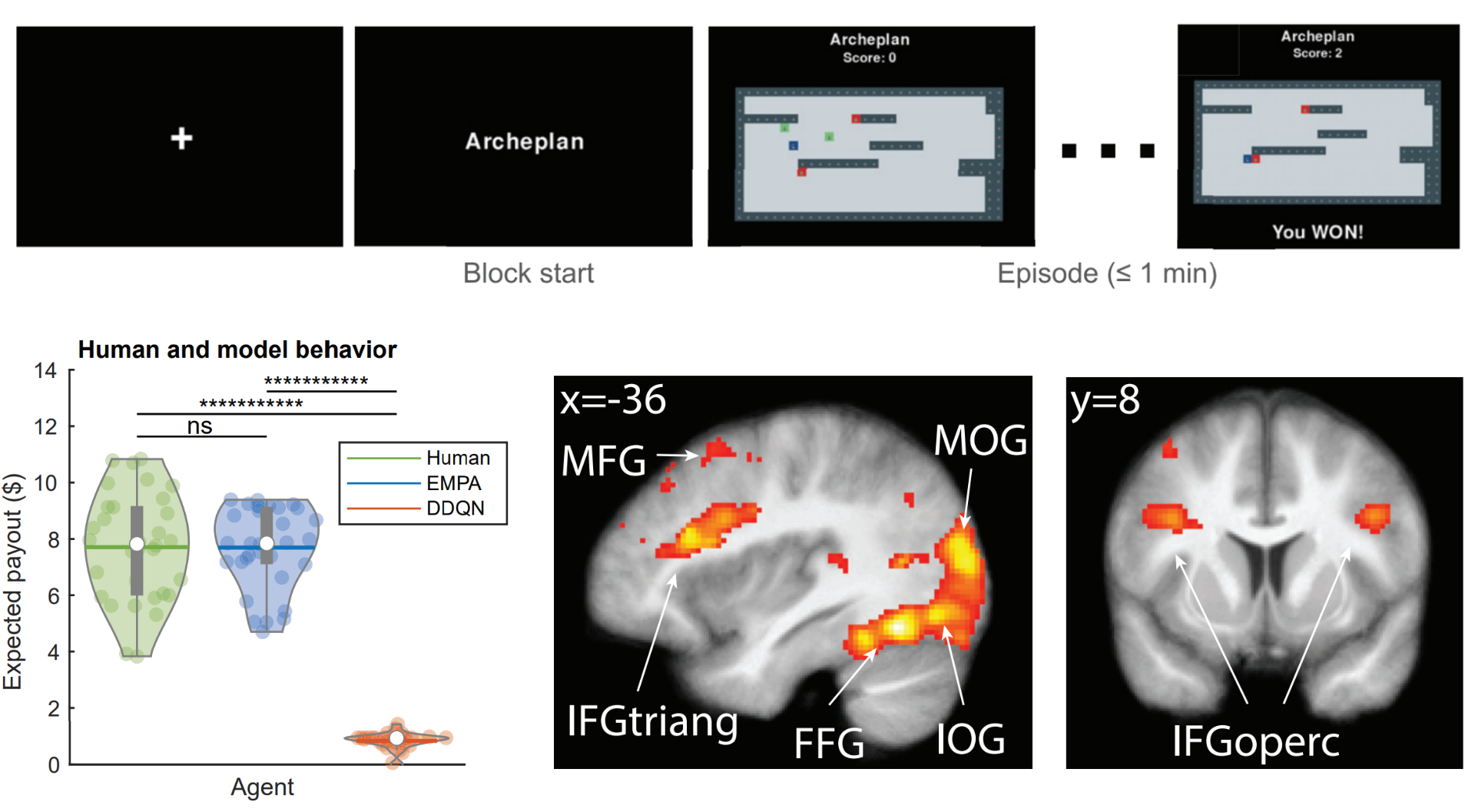

How do causal inferences shape reward-based learning in the brain?

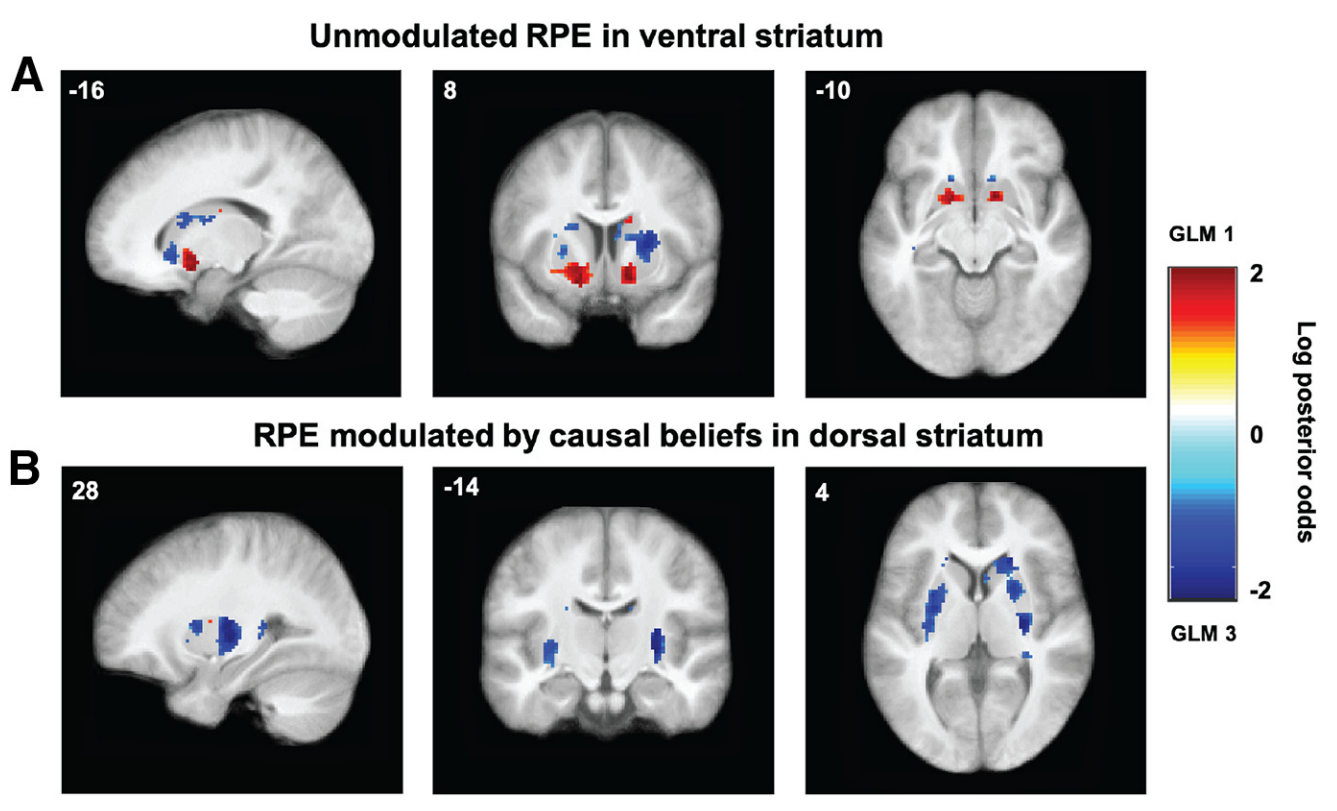

How do humans transfer knowledge across different tasks?

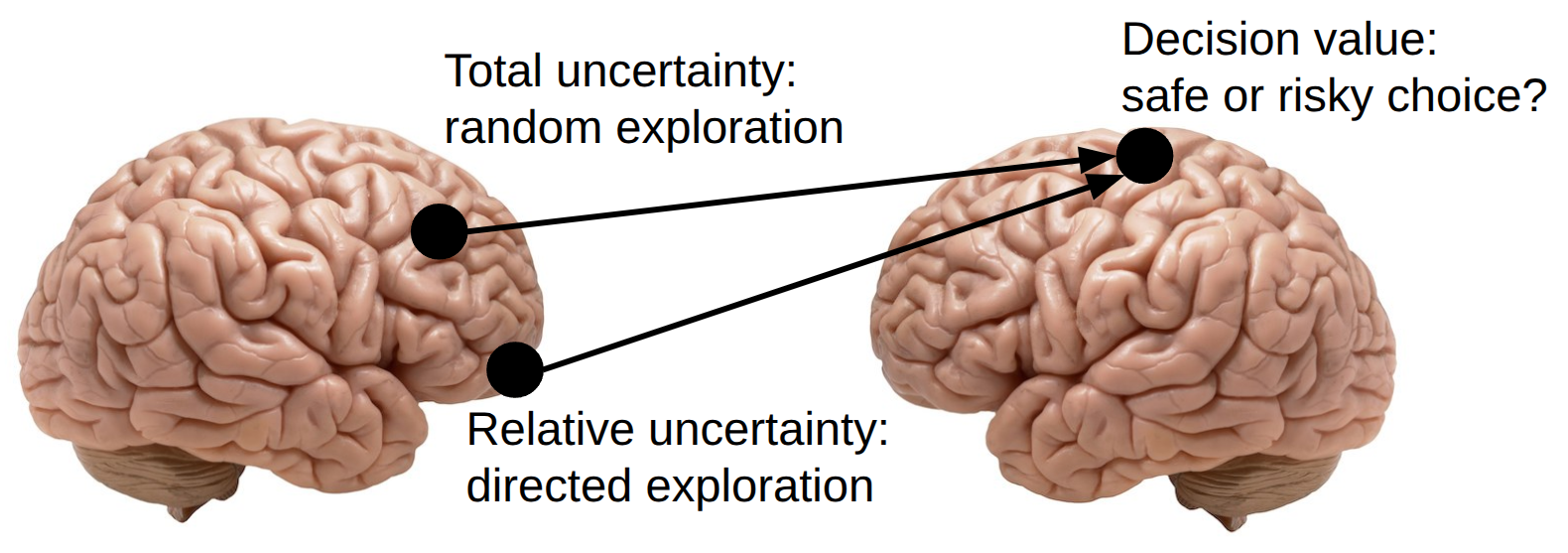

How does the brain represent different forms of uncertainty? How do those representations determine exploratory choices?

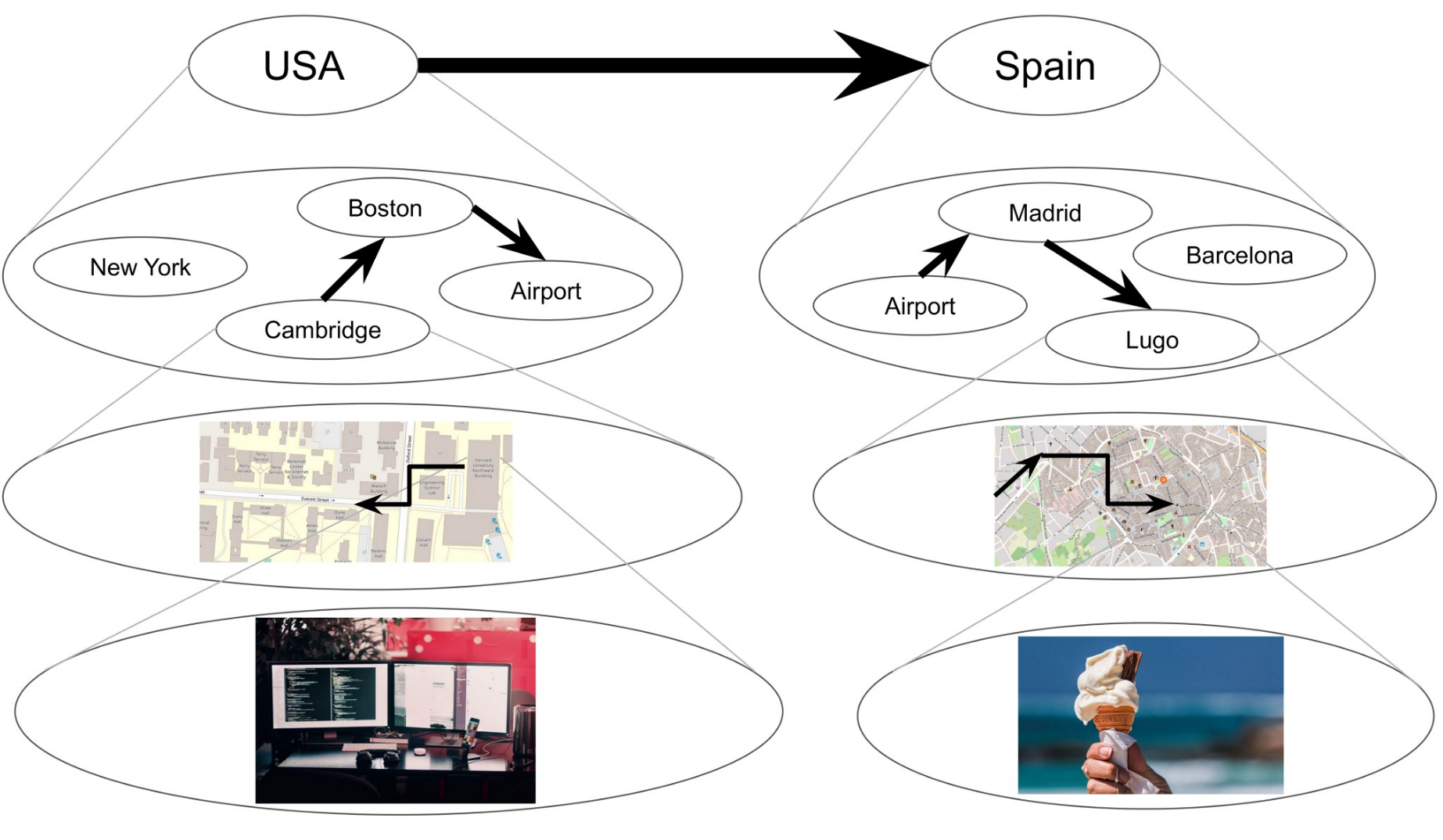

Why do humans represent their environments hierarchically? How are these hierarchical representations learned?